Last weekend, I woke up to the following news in my inbox:

The news, published in The Information, a newsletter widely read across the tech industry, stated that while the number of ChatGPT users continues to grow, the rate of improvement in the product seems to be slowing down. Unlike conventional tech coverage, The Information focuses on the business side of technology, uncovering trends, strategies, and insider details about the leading companies and players shaping the digital world. For those outside the industry, think of it as a specialized journalistic lens on the intersection of business and technology, and on how technology affects the economy, innovation, and daily life.

I reached out to Gary Marcus because, back in March 2022, he published an article on this very topic in Nautilus, a magazine read by tech professionals that blends science, philosophy, and culture. His article, “Deep Learning Is Hitting a Wall”, stirred significant controversy. Among other reactions, Sam Altman insinuated (without naming him directly, but referencing imagery from the article) that Gary was a “mediocre skeptic”, Greg Brockman openly mocked the title, and Yann LeCun claimed that deep learning wasn’t hitting a wall.

The core argument of Marcus’s article was that simply scaling models — increasing their size, complexity, or computational capacity to improve performance — would not solve hallucinations or abstraction problems.

Marcus replied, “I’ve been warning about the fundamental limits of traditional neural network approaches since 2001”. That was the year he published The Algebraic Mind, where he first described the concept of hallucinations. He amplified these warnings in Rebooting AI (which I discussed last year in articles available on Medium and Substack) and again in his most recent book, Taming Silicon Valley.

A few days ago, Marc Andreessen, co-founder of one of the top tech-focused venture capital firms, began revealing details about some of his AI investments. In a podcast (reported by The Information and others), Andreessen said, “We’re scaling [graphics processing units] at the same rate, but we haven’t seen any further improvement or increase in intelligence”. Essentially, he was echoing the sentiment that “deep learning is hitting a wall”.

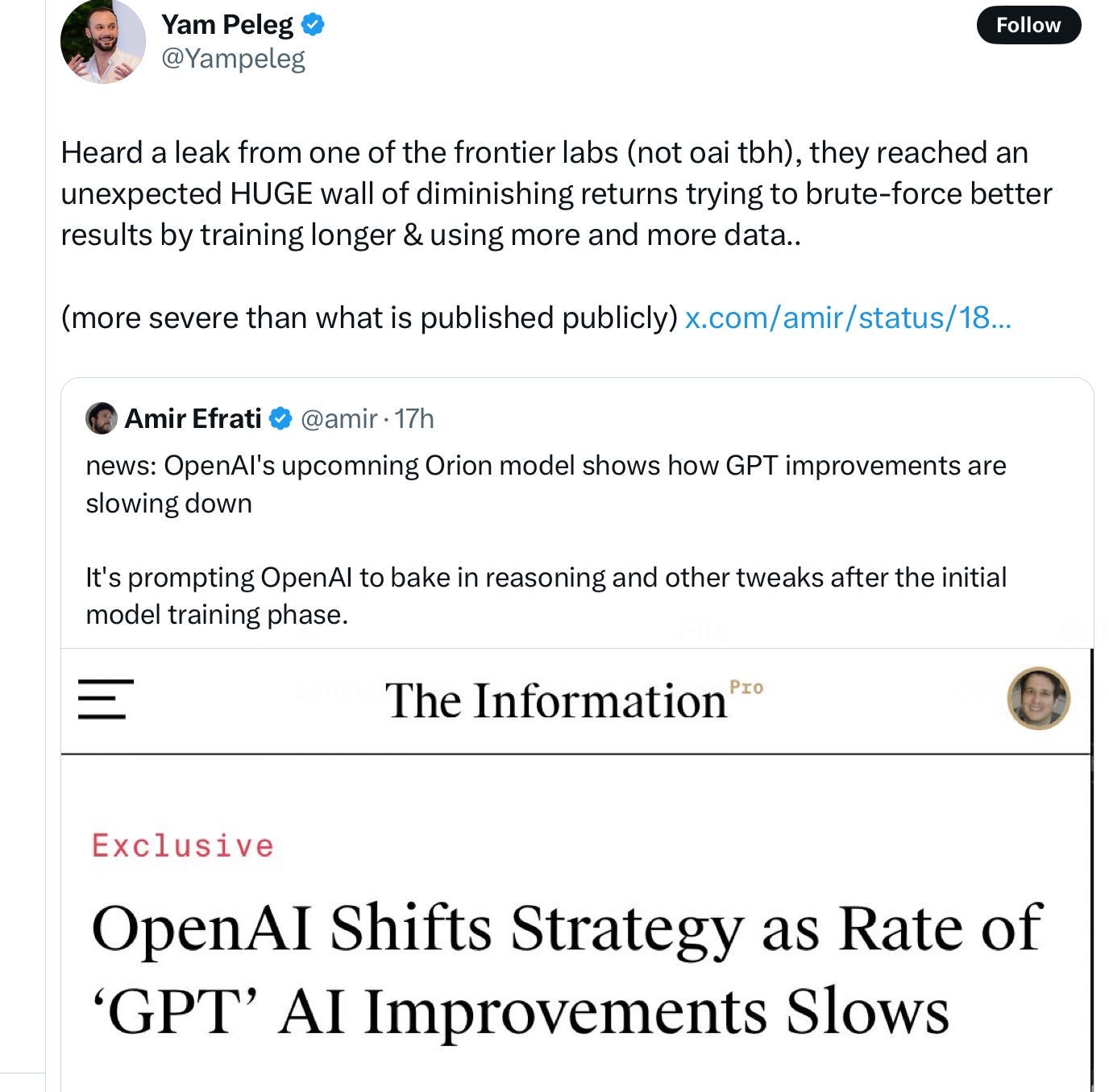

The day after the first message, Gary sent me the following screenshot with a note: “It’s not just OpenAI — there’s a second major company converging toward the same conclusion”:

The tweet was from Yam Peleg, a data scientist and machine learning expert known for his contributions to open-source projects. In it, Peleg claimed to have heard rumors that another major lab (unnamed) had also reached the point of diminishing returns. This is still only a rumor, though a plausible one. If it turns out to be true, it signals stormy skies ahead.

We may even witness an AI equivalent of a bank run — where a large number of customers simultaneously withdraw deposits out of fear of a bank’s insolvency.

The issue is that the idea that scaling models will keep making them better has always been a hypothesis, not an established law. What happens if, suddenly, people lose faith in that hypothesis?

It’s crucial to clarify that even if enthusiasm for generative AI wanes and tech company stock prices plummet, AI and LLMs won’t disappear. They’ll still have a secure place as tools for statistical approximation. However, that place might be smaller, and it’s entirely possible that LLMs alone won’t meet last year’s expectations as the path to AGI (Artificial General Intelligence) or the so-called “singularity” of AI.

A reliable AI is certainly achievable, but we’ll need to return to the drawing board to get there.